Last Updated on June 26, 2025

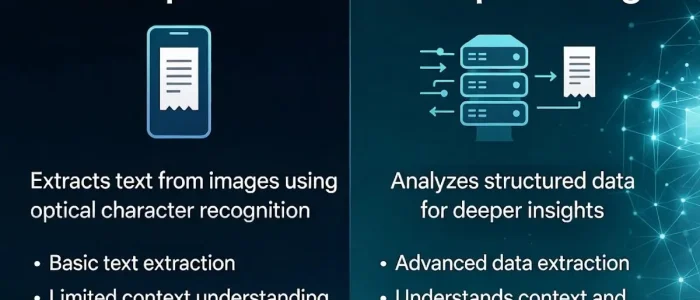

Tabscanner processes millions of receipts daily, so its convolutional neural network (CNN) models must keep improving to stay accurate and efficient. With massive datasets and a commitment to constant model refinement, traditional single-machine training approaches fall short. Distributed training in TensorFlow provides the tools to scale model development across multi-GPU and TPU setups.

This post explores how distributed training techniques accelerate Tabscanner’s model development lifecycle, the challenges of massive training sets, and the constant pursuit of improvement.

Why Distributed Training?

Training deep learning models requires processing vast amounts of data and computing complex mathematical operations. For Tabscanner’s OCR pipeline, this includes:

- Massive Datasets: Tens of millions of receipt images with varying layouts, languages, and lighting conditions.

- Complex Models: CNNs with millions of parameters to accurately detect text, classify data, and extract line-item details.

Distributed training addresses these challenges by spreading the workload across multiple GPUs or TPUs, enabling:

- Faster Training: Parallelizing computations reduces the time required to train models.

- Scalability: Handle massive datasets by distributing data across nodes.

- Efficient Resource Utilization: Leverage the full power of modern hardware to process data in parallel.

TensorFlow’s Distributed Training Framework

TensorFlow offers a robust distributed training framework, tf.distribute, which simplifies the implementation of distributed strategies. This framework supports:

- Multi-GPU Training: Scaling across GPUs on a single machine or a cluster.

- TPU Training: Leveraging Tensor Processing Units (TPUs) for high-speed matrix computations.

- Cluster Training: Spanning multiple machines for large-scale distributed training.

Distributed Training Strategies in TensorFlow

- Mirrored Strategy

- Replicates the model on each GPU of a single machine, gives every replica a slice of each batch, and synchronizes gradients so all copies stay identical.

- Example:

strategy = tf.distribute.MirroredStrategy()
with strategy.scope():
    model = build_model()
    model.compile(optimizer='adam', loss='categorical_crossentropy')

- Multi-Worker Strategy

- Distributes training across multiple machines (workers).

- Ideal for Tabscanner’s large-scale training needs, where datasets exceed the memory of a single machine.

- Example:

strategy = tf.distribute.MultiWorkerMirroredStrategy()
with strategy.scope():
    model = build_model()

- TPU Strategy

- Optimized for TPUs, which are purpose-built for matrix operations and enable training at lightning speed.

- Tabscanner uses TPU clusters for computationally intensive models.

- Example:

resolver = tf.distribute.cluster_resolver.TPUClusterResolver(tpu='')
tf.config.experimental_connect_to_cluster(resolver)
tf.tpu.experimental.initialize_tpu_system(resolver)
strategy = tf.distribute.TPUStrategy(resolver)
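For the Multi-Worker Strategy described above, each machine also needs to know who its peers are. TensorFlow reads this from the TF_CONFIG environment variable, which has to be set on every worker before the strategy is created. The following is a minimal sketch of building that value; the helper name `make_tf_config` and the host names and ports are hypothetical and only illustrate the shape of the configuration.

```python
import json
import os


def make_tf_config(worker_hosts, task_index):
    """Build the TF_CONFIG JSON string for one worker in the cluster."""
    if not 0 <= task_index < len(worker_hosts):
        raise ValueError("task_index must refer to an entry in worker_hosts")
    config = {
        # The same list, in the same order, on every worker.
        "cluster": {"worker": list(worker_hosts)},
        # Differs per machine: which entry in the list this process is.
        "task": {"type": "worker", "index": task_index},
    }
    return json.dumps(config)


# Hypothetical two-machine cluster; replace with real host:port pairs.
WORKERS = ["worker0.example.com:12345", "worker1.example.com:12345"]

# On the first machine, before creating MultiWorkerMirroredStrategy:
os.environ["TF_CONFIG"] = make_tf_config(WORKERS, task_index=0)
```

The second machine would run the same code with `task_index=1`. Everything else in the training script can stay identical across workers.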

How Distributed Training Benefits Tabscanner

- Faster Training on Massive Datasets

- Problem: Tabscanner’s dataset contains millions of images. Training on a single GPU takes weeks.

- Solution: Multi-GPU and TPU setups split each global batch across devices, so the slices are processed in parallel.

- Outcome: Training times are reduced from weeks to days, enabling faster iterations.

- Improved Model Performance Through Constant Iteration

- Regular updates to OCR models are essential to handle new receipt layouts, languages, and edge cases.

- Faster training cycles mean Tabscanner’s data scientists can experiment with architectures, hyperparameters, and augmentations more frequently.

- Handling Imbalanced Datasets with Smart Sharding

- Sharding the dataset with class balance in mind keeps rare receipt types (e.g., non-standard formats) represented on every GPU or TPU instead of concentrated on one.

- Smart sharding gives each replica's batches diverse training data, which helps generalization.

- Scaling with Data Growth

- As Tabscanner grows, the volume of data and the complexity of models increase.

- Distributed training allows seamless scaling to accommodate additional GPUs or nodes without significant code changes.
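The post does not spell out how "smart sharding" is implemented. One plausible reading is stratified sharding: group examples by class and deal them out round-robin, so rare classes are spread evenly across shards. The plain-Python sketch below shows that idea only; the function name and the class labels are hypothetical and this is not Tabscanner's actual pipeline.

```python
from collections import defaultdict


def stratified_shards(labels, num_shards):
    """Assign example indices to shards so each class is spread evenly.

    Examples are grouped by label, then dealt round-robin, so the
    per-shard count of any class differs by at most one.
    """
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    shards = [[] for _ in range(num_shards)]
    next_shard = 0
    for label in sorted(by_class):
        for index in by_class[label]:
            shards[next_shard].append(index)
            next_shard = (next_shard + 1) % num_shards
    return shards


# Eight common receipts and four rare ones, split across four devices.
example_labels = ["standard"] * 8 + ["handwritten"] * 4
example_shards = stratified_shards(example_labels, num_shards=4)
```

With these inputs every shard ends up with two "standard" and one "handwritten" example, so no device trains on common receipts only.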

Challenges of Distributed Training and Solutions

- Synchronization Overhead

- Training across multiple devices requires synchronizing gradients, which can slow down progress.

- Solution: tf.distribute strategies use all-reduce to combine gradients across devices efficiently, which keeps communication overhead low.

- Data Loading Bottlenecks

- Loading massive datasets can become a bottleneck.

- Solution: TensorFlow’s tf.data API enables parallelized data loading and preprocessing to keep devices busy.

- Fault Tolerance

- Distributed systems are prone to node failures.

- Solution: TensorFlow checkpoints allow Tabscanner to resume training from the last saved state without re-training from scratch.
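To make the gradient synchronization step concrete, here is a small pure-Python simulation of what an all-reduce with averaging produces. It is a conceptual sketch, not how TensorFlow implements it: real backends exchange data between devices in rings or trees, and the function name `all_reduce_mean` is hypothetical.

```python
def all_reduce_mean(per_replica_grads):
    """Average gradients element-wise and hand every replica the same result."""
    num_replicas = len(per_replica_grads)
    summed = [sum(values) for values in zip(*per_replica_grads)]
    averaged = [value / num_replicas for value in summed]
    # Every replica receives an identical copy, so weights stay in sync.
    return [list(averaged) for _ in range(num_replicas)]


# Two replicas, each holding gradients for the same three weights,
# computed on different slices of the batch.
grads = [
    [0.25, -0.5, 1.0],  # replica 0
    [0.75, 0.0, 3.0],   # replica 1
]
synced = all_reduce_mean(grads)
# Both replicas now hold [0.5, -0.25, 2.0]
```

Because every replica applies the same averaged gradient, the model copies remain identical after each step. The cost is that no replica can proceed until the exchange finishes, which is the synchronization overhead described above.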

Real-World Impact for Tabscanner

- Accurate OCR Across Diverse Conditions

Distributed training enables Tabscanner to train large CNNs capable of handling diverse conditions like poor lighting, varying fonts, and complex layouts.

- Faster Model Updates

With distributed training, Tabscanner’s data science team can incorporate new features and address customer-specific requirements more rapidly.

- Cost Efficiency

By using distributed training with TPUs, Tabscanner achieves faster results while optimizing computational costs, especially for large-scale experiments.

Distributed Training Workflow Example

Here’s how Tabscanner uses TensorFlow’s distributed training to improve its OCR models:

import tensorflow as tf

# Define a distributed strategy (one replica per visible GPU)
strategy = tf.distribute.MirroredStrategy()

# Prepare the dataset. image_paths (file paths) and labels (integer class ids)
# are assumed to be defined elsewhere.
def preprocess_data(image_path, label):
    # Load the file and decode it as a single-channel (grayscale) image
    image = tf.io.read_file(image_path)
    image = tf.io.decode_image(image, channels=1, expand_animations=False)
    # Resize and normalize pixel values to [0, 1]
    image = tf.image.resize(image, (128, 128))
    image = image / 255.0
    return image, label

dataset = tf.data.Dataset.from_tensor_slices((image_paths, labels))
dataset = dataset.map(preprocess_data).batch(32).prefetch(tf.data.AUTOTUNE)

# Define the CNN model
def build_model():
    model = tf.keras.Sequential([
        tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(128, 128, 1)),
        tf.keras.layers.MaxPooling2D((2, 2)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dense(10, activation='softmax')
    ])
    return model

# Build and compile inside the strategy scope so variables are mirrored
with strategy.scope():
    model = build_model()
    model.compile(optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['accuracy'])

# Train the model; each batch is split across the replicas
model.fit(dataset, epochs=10)
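One detail worth spelling out: with MirroredStrategy, the size passed to batch() is the global batch, which TensorFlow splits across replicas. The sketch below scales that size with the replica count and parallelizes preprocessing through num_parallel_calls. The per-replica size of 32 and the zero-filled synthetic tensors are assumptions for illustration, standing in for real receipt images.

```python
import tensorflow as tf

strategy = tf.distribute.MirroredStrategy()

# Assumed per-device batch size; the global batch grows with the hardware.
PER_REPLICA_BATCH = 32
global_batch = PER_REPLICA_BATCH * strategy.num_replicas_in_sync


def normalize(image, label):
    # Convert uint8 pixels to floats in [0, 1].
    return tf.cast(image, tf.float32) / 255.0, label


# Synthetic stand-in data: 64 blank 128x128 grayscale images.
images = tf.zeros([64, 128, 128, 1], dtype=tf.uint8)
labels = tf.zeros([64], dtype=tf.int32)

dataset = (
    tf.data.Dataset.from_tensor_slices((images, labels))
    .shuffle(64)
    # Run preprocessing on several elements at once.
    .map(normalize, num_parallel_calls=tf.data.AUTOTUNE)
    .batch(global_batch)
    # Prepare the next batch while the current one is training.
    .prefetch(tf.data.AUTOTUNE)
)
```

Keeping the per-replica size fixed means each device does the same amount of work per step no matter how many devices are added. A larger global batch may call for retuning the learning rate.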

Conclusion

Distributed training in TensorFlow revolutionizes Tabscanner’s approach to building OCR models. By leveraging multi-GPU and TPU architectures, the team can:

- Train models faster.

- Handle massive datasets efficiently.

- Continuously improve performance to meet customer needs.

As Tabscanner continues to innovate, distributed training ensures scalability and adaptability, making it possible to deliver cutting-edge receipt processing solutions at scale. 🚀

Want to learn more about TensorFlow distributed training? Check out the official TensorFlow documentation.